Why Time Allocation Matters

One of the biggest questions IT leaders wrestle with is: how should my team spend its time?

Lean too far into operations and innovation stalls. Lean too far into projects and the business risks outages, compliance gaps, or unhappy users. The right balance drives both stability and growth.

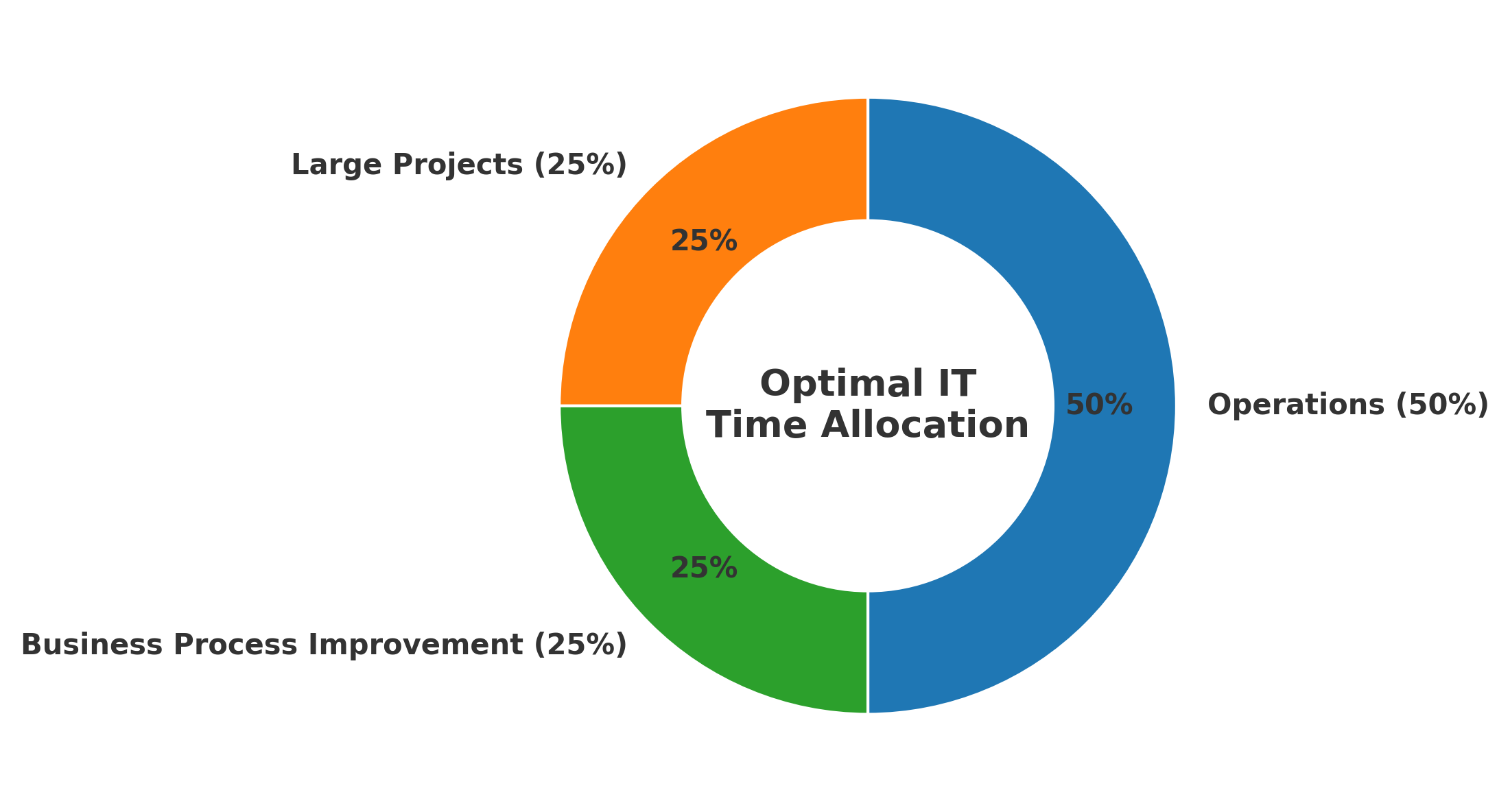

The 50/25/25 Framework

A simple but effective baseline I’ve seen work across organizations:

- 50% Operations — keeping the lights on: uptime, user support, monitoring, patching, compliance, incident response.

- 25% Business Process Improvement (BPI) — workflow optimization, automation, cost reduction, service improvements.

- 25% Large Projects — ERP deployments, cloud migrations, cybersecurity enhancements.

This model ensures IT stays reliable while still investing in efficiency and transformation. It’s not rigid: priorities shift with business needs, but the model provides a healthy baseline.
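To make the split concrete, it can help to turn the percentages into hours and compare them with what the team actually logged. The sketch below is one way to do that; the function names, the 10-person team, the 40-hour week, and the logged hours are all illustrative assumptions, not part of the framework.

```python
from typing import Dict

# Baseline split from the framework (shares must sum to 1.0).
BASELINE: Dict[str, float] = {"operations": 0.50, "bpi": 0.25, "projects": 0.25}


def planned_hours(team_size: int, hours_per_person: float,
                  split: Dict[str, float] = BASELINE) -> Dict[str, float]:
    """Convert a percentage split into weekly hours per category."""
    if abs(sum(split.values()) - 1.0) > 1e-9:
        raise ValueError("split must sum to 100%")
    capacity = team_size * hours_per_person
    return {category: capacity * share for category, share in split.items()}


def drift(logged_hours: Dict[str, float],
          split: Dict[str, float] = BASELINE) -> Dict[str, float]:
    """Actual share minus target share, in percentage points."""
    total = sum(logged_hours.values())
    if total <= 0:
        raise ValueError("no hours logged")
    return {
        category: round(100 * (logged_hours.get(category, 0.0) / total - share), 1)
        for category, share in split.items()
    }


# Hypothetical team: 10 people, 40 hours each per week.
print(planned_hours(10, 40))
# {'operations': 200.0, 'bpi': 100.0, 'projects': 100.0}

# Hypothetical timesheet totals for the same week.
print(drift({"operations": 260, "bpi": 60, "projects": 80}))
# {'operations': 15.0, 'bpi': -10.0, 'projects': -5.0}
```

A positive drift on operations, as in this made-up week, is the usual signal that firefighting is eating into improvement and project time.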

Alternative Models for Different Contexts

Every organization is different. These three models adapt the baseline to different business realities. Going by the descriptions, the splits below read as Operations / Large Projects / BPI:

- Balanced Growth (50/30/20): Mid-sized firms with stable ops, tilting more toward projects for growth.

- Transformation-Heavy (40/45/15): Organizations in the middle of major digital change where large programs dominate.

- Lean Optimization (45/25/30): Companies doubling down on automation, continuous improvement, and reducing technical debt.

The point: time allocation should shift with business maturity and priorities.

Challenges in IT Operations

Even with half the team’s time dedicated to it, operations work remains tough:

- Legacy + new systems that don’t integrate cleanly.

- Limited visibility into assets and services.

- Balancing scalability with security.

- Budget pressure — “do more with less.”

- Rapid change in tools, practices, and skills.

The best IT leaders anticipate these challenges by building automation, resilience, and visibility into their operating model.

Making Business Process Improvement Real

Improvement work only matters if it’s measurable:

- KPIs: response times, error rates, cycle times.

- ROI: cost vs. benefit.

- Feedback loops: employee and customer insights.

- Process metrics: throughput, lead time, defect rates.

If you can’t measure it, it’s not true improvement.
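Two of these measures reduce to simple arithmetic. A minimal sketch, assuming the common "net benefit over cost" definition of ROI and a plain before/after comparison for a process metric; the function names and the figures are hypothetical.

```python
def roi(benefit: float, cost: float) -> float:
    """Simple ROI: net benefit divided by cost (0.5 means a 50% return)."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


def percent_change(before: float, after: float) -> float:
    """Relative change against a baseline; negative means the metric went down."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return 100 * (after - before) / before


# Hypothetical automation effort: 60,000 spent, 90,000 saved.
print(roi(benefit=90_000, cost=60_000))   # 0.5

# Hypothetical cycle time dropping from 8 days to 6 days.
print(percent_change(before=8.0, after=6.0))  # -25.0
```

Capturing the "before" value ahead of the change is the part teams most often skip, and without it neither number can be computed.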

Large IT Projects: Where Time Goes

Typical categories include ERP/CRM deployments, cloud or data center migrations, cybersecurity modernization, and disaster recovery. These demand cross-functional planning and strong governance to deliver.

Digital Transformation Is Continuous

Transformation is no longer “one and done.” It’s baked into every initiative:

- IT uplift: modern infrastructure, cloud, security.

- Digitized operations: AI, RPA, analytics.

- Customer experience: seamless digital touchpoints.

- Workforce transformation: training, new tools, new ways of working.

In pharma and biotech, these shifts accelerate R&D, streamline trials, and strengthen patient engagement.

Talent and Culture Matter

Time allocation isn’t just what IT does—it’s how IT works. Smart leaders carve out time for:

- Upskilling on cloud, AI, and cybersecurity.

- Agile practices and short delivery cycles.

- Knowledge sharing across IT and the business.

Without investing in people and culture, even the best time split won’t deliver full value.

Automation and AI Are Changing the Ratios

Automation is steadily reducing the operational burden. Monitoring, patching, and incident response are increasingly automated—freeing teams to invest more in process improvement and strategic projects.

As that happens, the 50/25/25 balance could shift toward something like 40/30/30.

Running IT by the Numbers

Strong IT management requires metrics:

- Visibility: inventories, monitoring, dashboards.

- KPIs: uptime, MTTR, SLA compliance, cost per incident, project timeliness, user satisfaction.

With these in place, IT shifts from reactive firefighting to proactive strategy.
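Several of these KPIs can be derived from nothing more than incident open and resolve timestamps. The sketch below assumes MTTR means "mean time to resolve", that every incident counts as a full outage, and that incidents do not overlap; the `Incident` record and function names are hypothetical, not taken from any particular ITSM tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Incident:
    opened: datetime
    resolved: datetime

    @property
    def duration(self) -> timedelta:
        return self.resolved - self.opened


def mttr(incidents: List[Incident]) -> timedelta:
    """Mean time to resolve: total resolution time / number of incidents."""
    if not incidents:
        raise ValueError("no incidents")
    total = sum((i.duration for i in incidents), timedelta())
    return total / len(incidents)


def sla_compliance(incidents: List[Incident], target: timedelta) -> float:
    """Percentage of incidents resolved within the SLA target."""
    if not incidents:
        raise ValueError("no incidents")
    met = sum(1 for i in incidents if i.duration <= target)
    return 100 * met / len(incidents)


def uptime_percent(incidents: List[Incident], period: timedelta) -> float:
    """Uptime over a period, treating each incident as non-overlapping downtime."""
    downtime = sum((i.duration for i in incidents), timedelta())
    return 100 * (1 - downtime / period)


# Hypothetical month with two outages: one of 1 hour, one of 3 hours.
start = datetime(2024, 1, 1)
incidents = [
    Incident(start, start + timedelta(hours=1)),
    Incident(start + timedelta(days=10), start + timedelta(days=10, hours=3)),
]
print(mttr(incidents))                                # 2:00:00
print(sla_compliance(incidents, timedelta(hours=2)))  # 50.0
print(round(uptime_percent(incidents, timedelta(days=30)), 2))  # 99.44
```

In practice, partial degradations and overlapping incidents need more careful handling than this, so treat the uptime figure as a first approximation.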

Conclusion

The 50/25/25 framework provides a healthy starting point, but it’s not a rulebook. The right mix shifts with context: growth, transformation, or optimization. Pair any model with visibility, metrics, culture, and automation, and IT leaders can keep their teams delivering stability today and transformation tomorrow.